
Sijie (Ada) Cheng

I'm a second-year Ph.D. candidate at the School of Computer Science and Technology, Tsinghua University, Beijing, China, advised by Prof. Yang Liu at THUNLP and the Institute for AI Industry Research (AIR). Previously, I received my Master's degree from Fudan University in 2023, advised by Prof. Yanghua Xiao at the Knowledge Work Lab. I have received several awards, including the China Association for Science and Technology's Young Talents Project, Ph.D. Program - China Computer Federation (中国科协青年人才托举工程博士生专项计划-依托中国计算机学会); the Outstanding Master's Thesis Award of the Shanghai Computer Society (上海市计算机学会优秀硕士学位论文奖); the National Scholarship (国家奖学金); the President Award (院长奖学金) of the Institute for AI Industry Research @ Tsinghua University; Outstanding Graduate Student (优秀毕业生) of Shanghai; and the Outstanding Paper Award at the MFM-EAI Workshop@ICML 2024.

👓 Recruiting full-time employees and interns for AI in smart glasses, and looking for research collaborations on egocentric foundation models.

Email / Resume / Google Scholar / GitHub


Research: Egocentric Foundation Models represent the next generation of Foundation Models

I am broadly interested in Egocentric Multi-modal Large Language Models for Embodied AI, aiming to create systems that see, think, and act like humans from a first-person perspective.

- Egocentric Understanding: Understanding observations and interactions from a first-person perspective in daily human activities, as in EgoThink | VidEgoThink.

- On-Device Model Training: Training foundation models for deployment on wearable devices or autonomous robots, as in OpenChat | ConvLLaVA | IVM.

- Implicit Knowledge in Pre-trained Models: Exploring and analyzing the knowledge inherent in pre-trained models, as in FedGEMS | StableToolBench | Explanation | Taxonomy | Commonsense.


Selected Publications

A full list of publications is here. (* indicates equal contribution.)


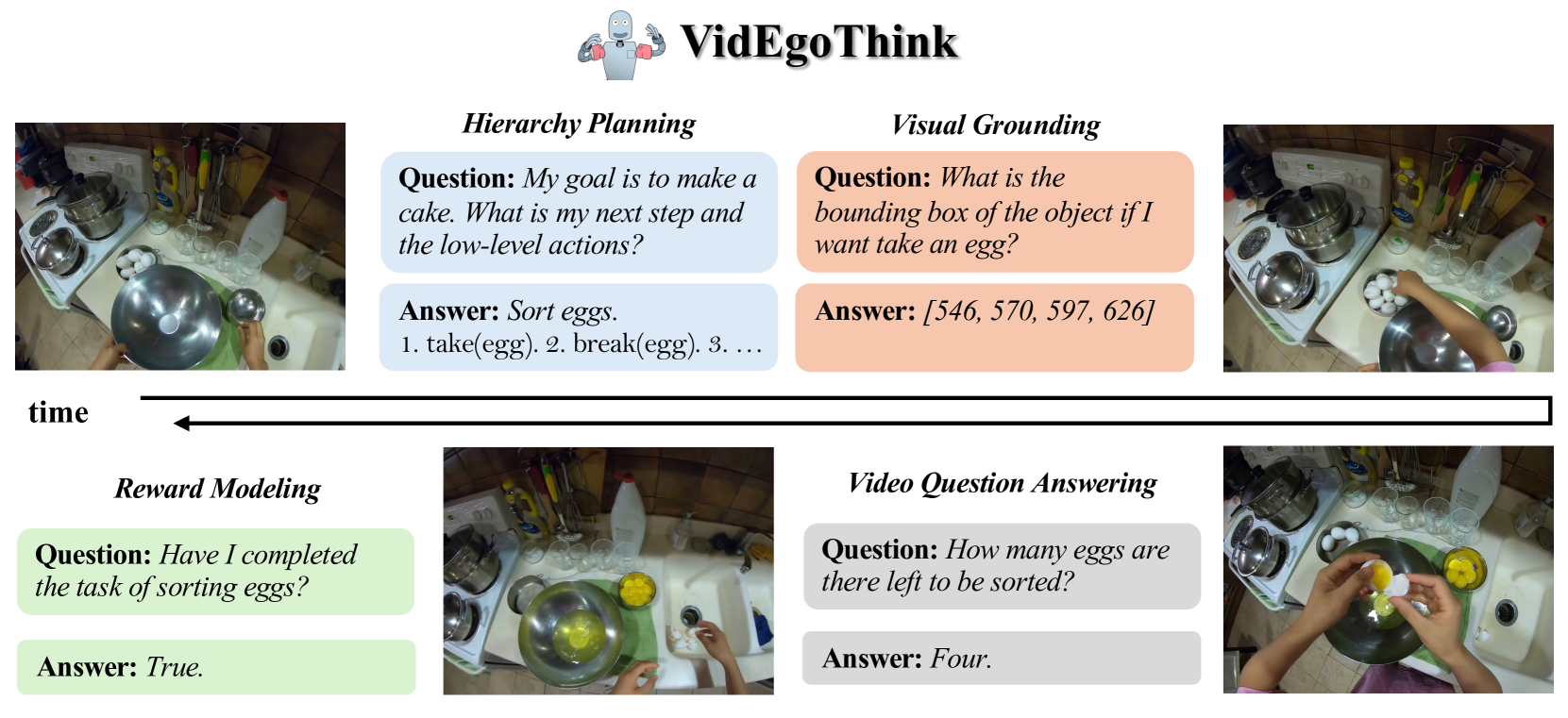

VidEgoThink: Assessing Egocentric Video Understanding Capabilities for Embodied AI

Sijie Cheng, Kechen Fang*, Yangyang Yu*, Sicheng Zhou*, Bohao Li, Ye Tian, Tingguang Li, Lei Han, Yang Liu

arXiv, 2024 (Hugging Face Daily Papers Top-1)

arXiv


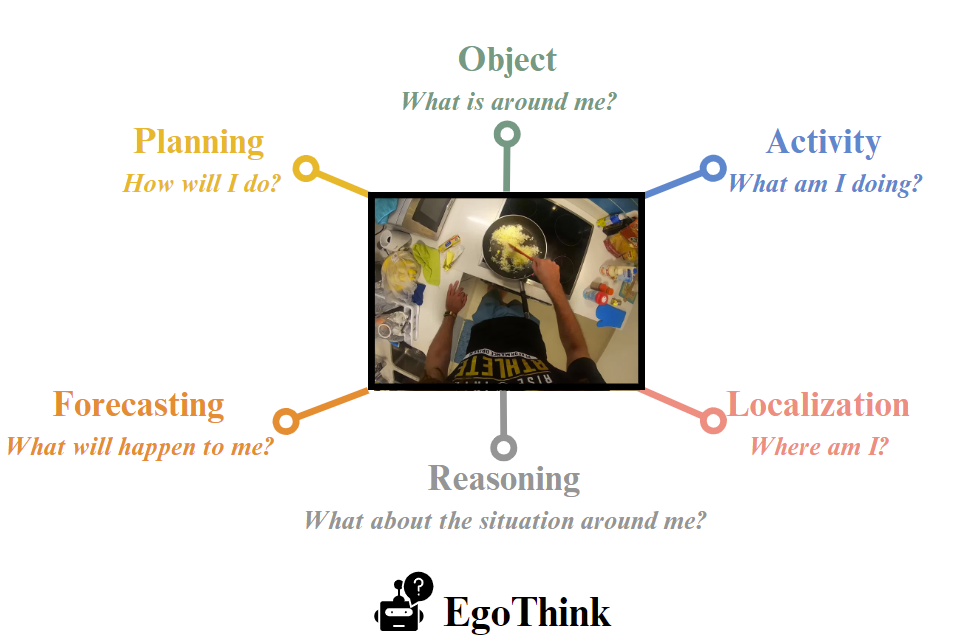

EgoThink: Evaluating First-Person Perspective Thinking Capability of Vision-Language Models

Sijie Cheng*, Zhicheng Guo*, Jingwen Wu*, Kechen Fang, Peng Li, Huaping Liu, Yang Liu

CVPR, 2024 (Highlight)

Homepage / arXiv / GitHub / Dataset / Leaderboard


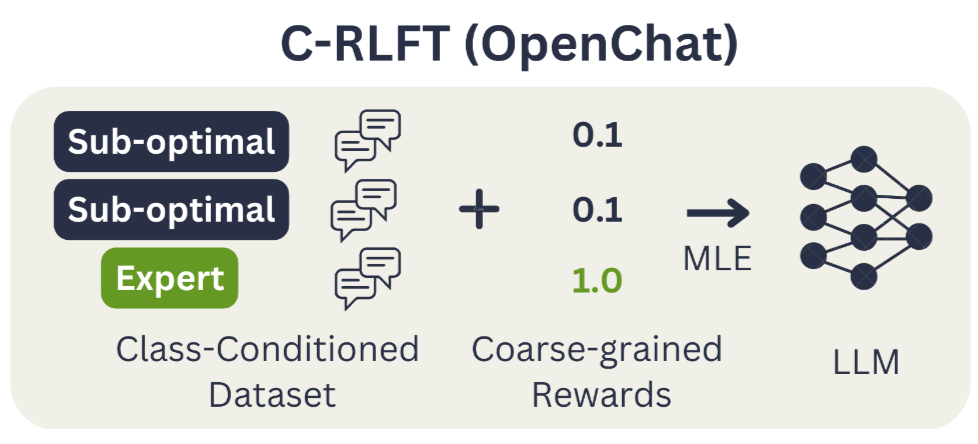

OpenChat: Advancing Open-source Language Models with Mixed-Quality Data

Guan Wang*, Sijie Cheng*, Xianyuan Zhan, Xiangang Li, Sen Song, Yang Liu

ICLR, 2024 (5.2k+ GitHub Stars, 100k+ Hugging Face Downloads)

arXiv


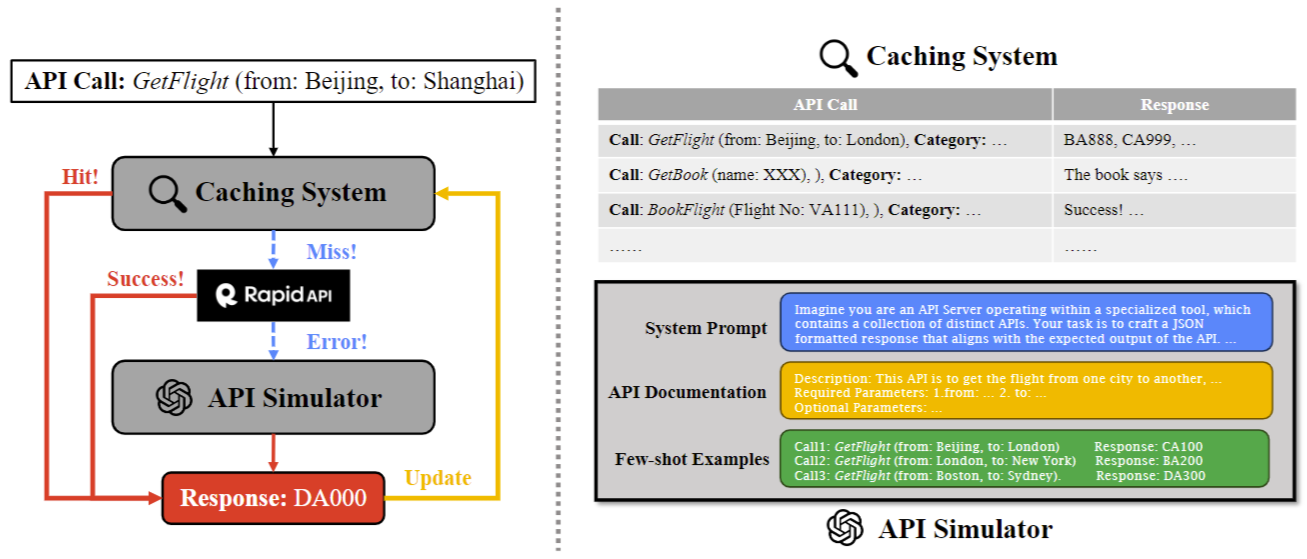

StableToolBench: Towards Stable Large-Scale Benchmarking on Tool Learning of Large Language Models

Zhicheng Guo, Sijie Cheng, Hao Wang, Shihao Liang, Yujia Qin, Peng Li, Zhiyuan Liu, Maosong Sun, Yang Liu

ACL, 2024 (100+ GitHub Stars)

project page / arXiv


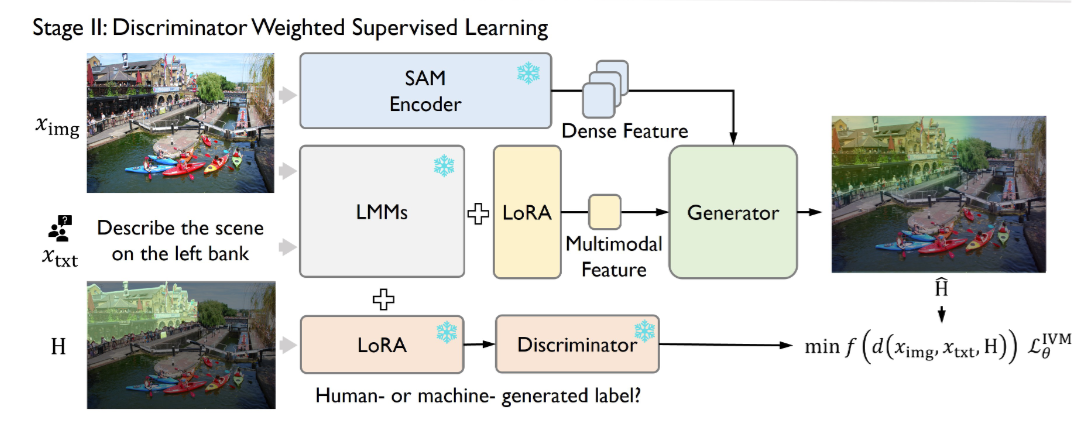

Instruction-Guided Visual Masking

Jinliang Zheng*, Jianxiong Li*, Sijie Cheng, Yinan Zheng, Jiaming Li, Jihao Liu, Yu Liu, Jingjing Liu, Xianyuan Zhan

NeurIPS, 2024 (ICML 2024 MFM-EAI Workshop Outstanding Paper)

arXiv


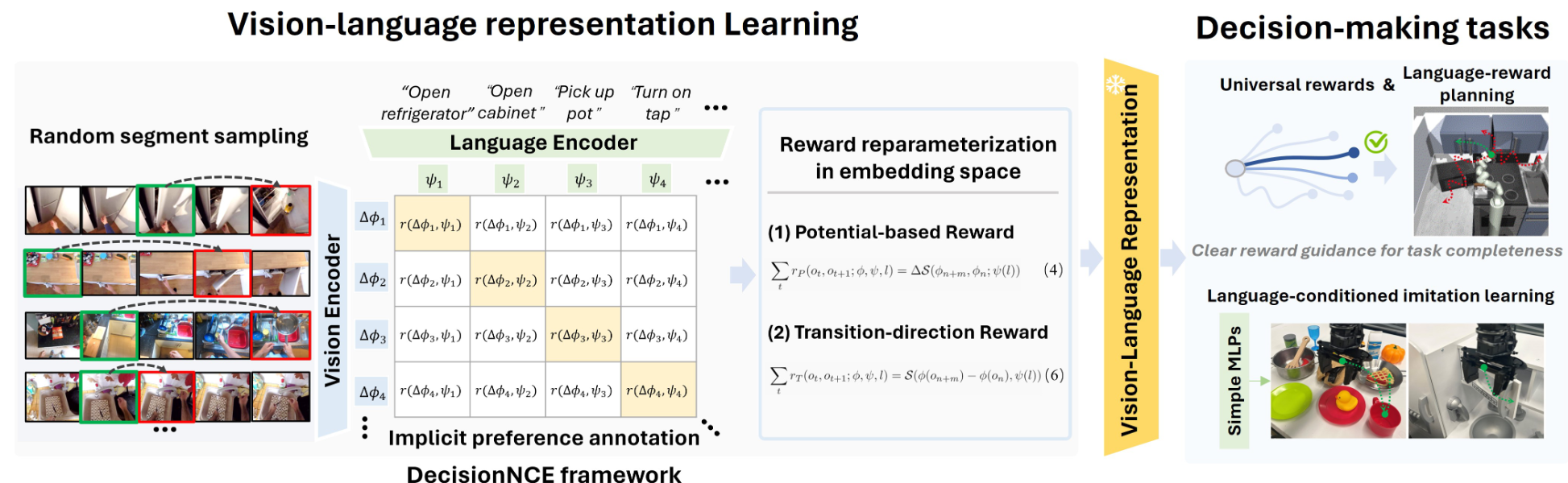

DecisionNCE: Embodied Multimodal Representations via Implicit Preference Learning

Jianxiong Li*, Jinliang Zheng*, Yinan Zheng, Liyuan Mao, Xiao Hu, Sijie Cheng, Haoyi Niu, Jihao Liu, Yu Liu, Jingjing Liu, Yaqin Zhang, Xianyuan Zhan

ICML, 2024 (ICML 2024 MFM-EAI Workshop Outstanding Paper)

arXiv


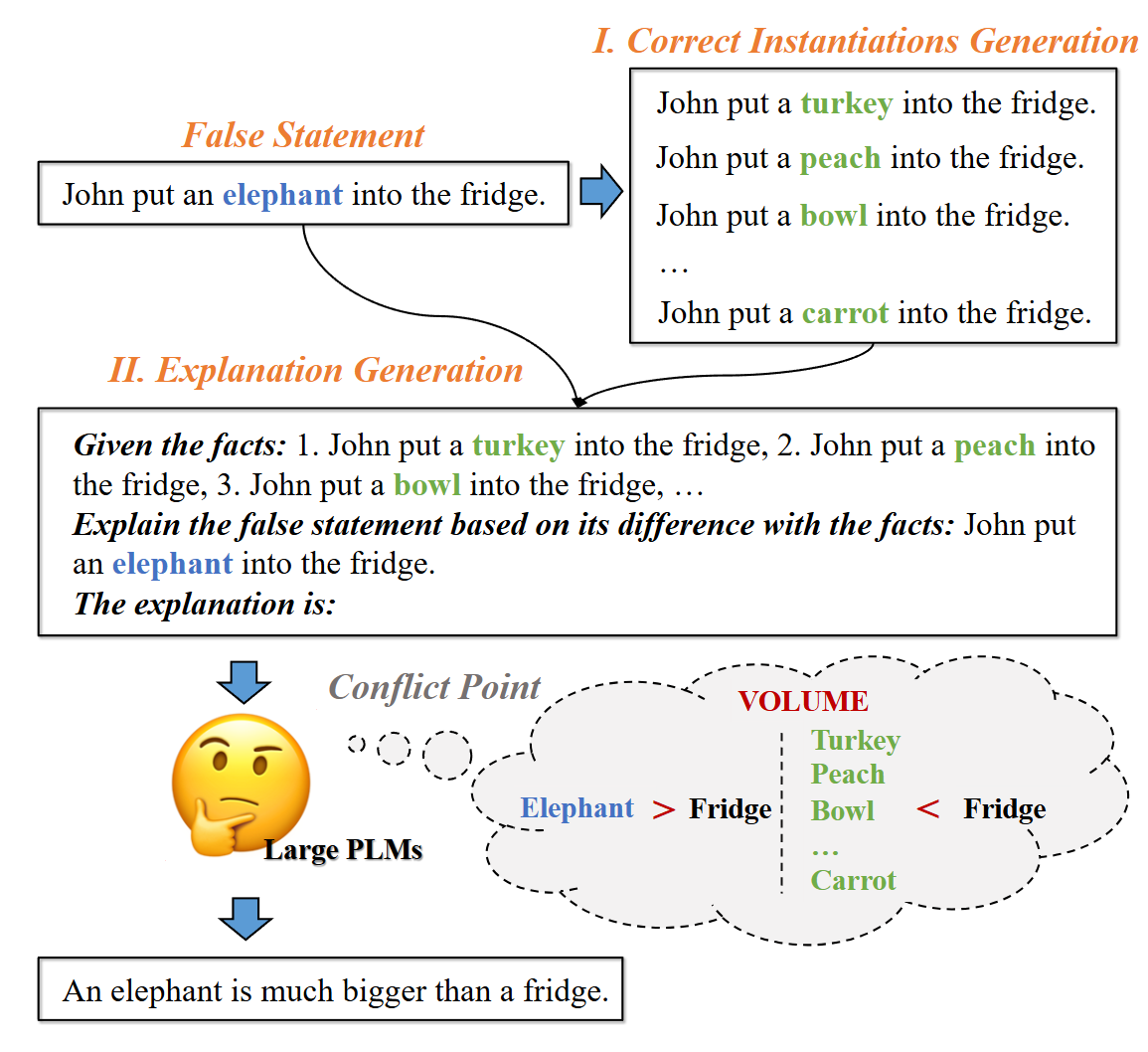

Unsupervised Explanation Generation via Correct Instantiations

Sijie Cheng, Zhiyong Wu, Jiangjie Chen, Zhixing Li, Yang Liu, Lingpeng Kong

AAAI, 2023 (Oral)

arXiv


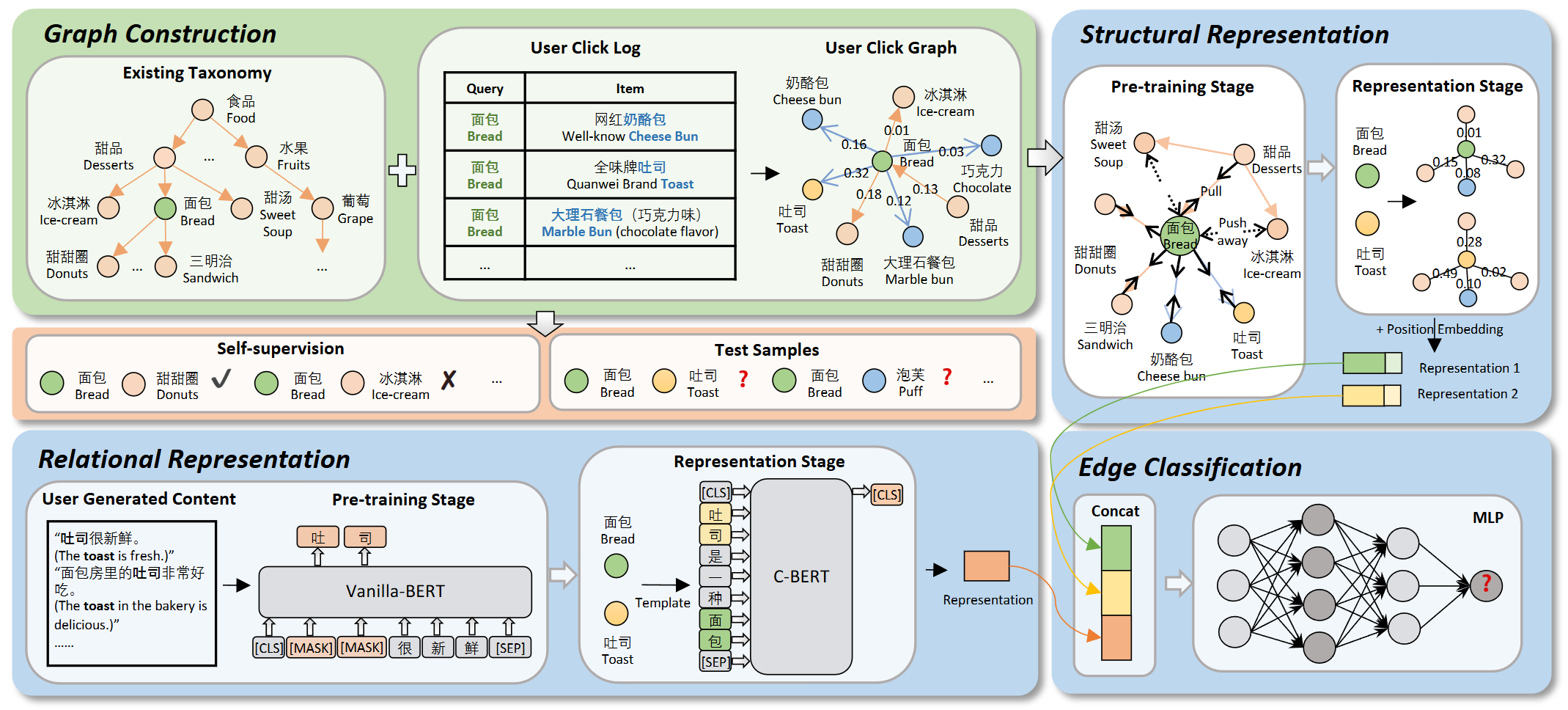

Learning What You Need from What You Did: Product Taxonomy Expansion with User Behaviors Supervision

Sijie Cheng, Zhouhong Gu, Bang Liu, Rui Xie, Wei Wu, Yanghua Xiao

ICDE, 2022 (Granted National Invention Patent)

arXiv


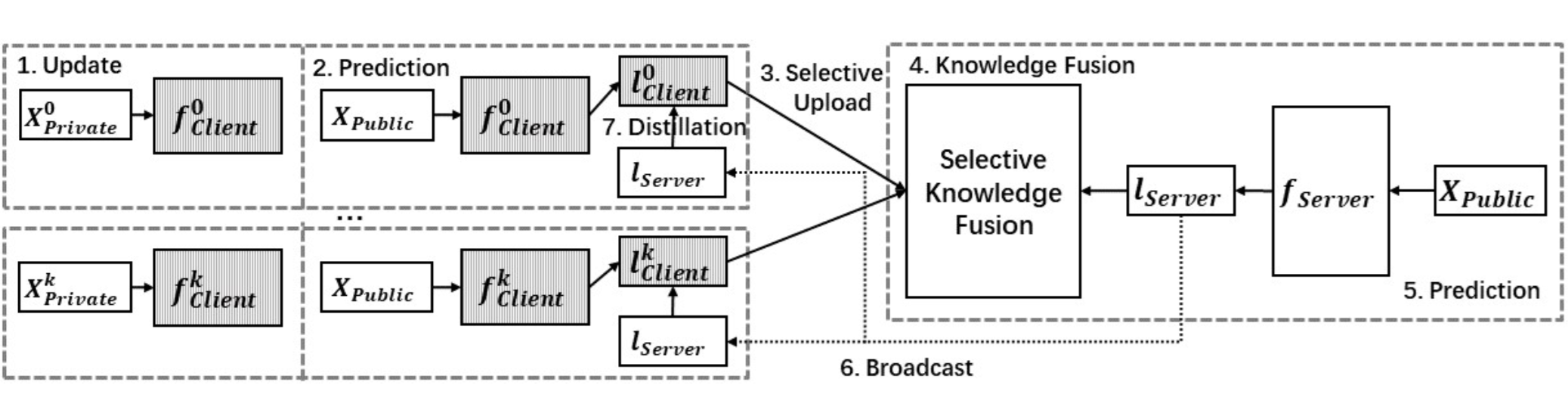

FedGEMS: Federated Learning of Larger Server Models via Selective Knowledge Fusion

Sijie Cheng, Jingwen Wu, Yanghua Xiao, Yang (Veronica) Liu, Yang Liu

Google Workshop on Federated Learning and Analytics, 2022 (Granted National Invention Patent)

arXiv

- Foundation Model Group, Rayneo - Intern (Jan. 2025 - Present)

  Manager: Hongwei Li (CEO)

- Robotics X, Tencent - Research Intern (Jun. 2024 - Dec. 2024)

  Manager: Lei Han; Peers: Tingguang Li, Ye Tian

- Pre-training Group, 01.AI Company - Research Intern (Aug. 2023 - Mar. 2024)

  Manager: Xiangang Li; Peers: Wenhao Huang, Xiang Yue

- Investment Department, Sinovation Ventures - Investment Intern (Feb. 2023 - Dec. 2023)

  Manager: Bobing Ren

- Natural Language Processing Group, Shanghai AI Lab - Research Intern (Mar. 2022 - Dec. 2022)

  Manager: Prof. Lingpeng Kong; Peer: Zhiyong Wu

- Institute for AI Industry Research, Tsinghua University - Research Intern (Jun. 2021 - Aug. 2023)

  Managers: Prof. Yang Liu, Yang (Veronica) Liu

- Natural Language Understanding Group, Meituan - Research Intern (Nov. 2020 - Jun. 2021)

  Manager: Rui Xie

- Text Intelligence Lab, Westlake University - Research Intern (Sep. 2019 - Sep. 2020)

  Manager: Prof. Yue Zhang; Peer: Leyang Cui

- Core Competitiveness of Scientific Research in the Era of Large Models, International Conference on MultiLingual Intelligent Information Processing, Beijing, China, Nov. 2024

- EgoThink: Evaluating First-Person Perspective Thinking Capability of Vision-Language Models, ZhiDX, Online, Sep. 2024

- Core Competitiveness of Scientific Research in the Era of Large Models, The Fourth Chinese Conference on Affective Computing, Nanchang, China, Jul. 2024

- EgoThink: Evaluating First-Person Perspective Thinking Capability of Vision-Language Models, AITIME, Online, Apr. 2024

- Advancing Open-source Language Models with Mixed-Quality Data, Next Capital, Online, Mar. 2024

- Small- and Medium-Scale Foundation Models are Everywhere, Chinese Academy of Sciences, Beijing, China, Mar. 2024

- OpenChat: Advancing Open-source Language Models with Mixed-Quality Data, Max-likelihood Community, Online, Nov. 2023

- How to Adapt to the Pace of Research in the Era of LLMs, MLNLP Community, Online, Nov. 2023

- Research Trends in the Era of Foundation Models, Beijing Alumni Association of Fudan University, Beijing, China, Nov. 2023

- Foundation, Construction, and Application of Knowledge Graph, Tsinghua University, Beijing, China, Jul. 2021

- Follow Your Heart: My Experience in Computer Science, Microsoft Research Asia, Beijing, China, Mar. 2019

- China Association for Science and Technology's Young Talents Project, Ph.D. Program - China Computer Federation, China, 2025

- Annual Best Paper Award, THUMT, 2024

- 1st Place, Tencent Basketball Association, 2024

- President Award, Institute for AI Industry Research@Tsinghua University, 2024

- Financial Assistance, Widening Natural Language Processing@EMNLP, 2024

- Outstanding Paper Award, MFM-EAI Workshop@ICML, 2024

- Outstanding Master's Thesis Award, Shanghai Computer Society, 2024

- Financial Assistance, The Twelfth International Conference on Learning Representations (ICLR), 2024

- Outstanding Graduate Student, Shanghai, 2023

- National Scholarship, China, 2021-2022

- 1st Place, Women's Basketball Graduate School Cup at Fudan University, 2020